- 작성일

- 2025.05.22

- 수정일

- 2025.05.23

- 작성자

- 양우석

- 조회수

- 95

졸업과제 관련해서 팀원을 구하기 위해 글을 드립니다.

졸업 과제를 해야 하는데요 . 같이 할 두분을 찾습니다.

졸업 과제를 해야 하는데요 . 같이 할 두분을 찾습니다.

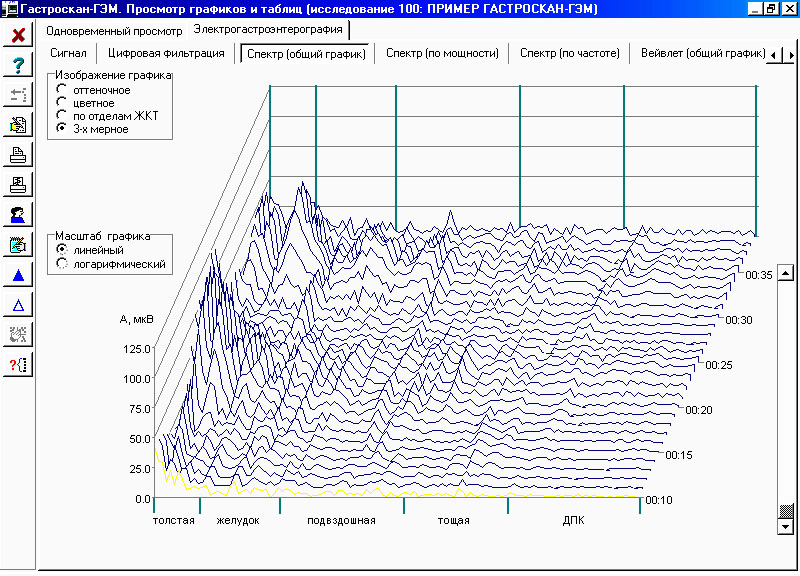

This screenshot is from a Russian medical software called Гастроскан-ГЭМ (GastroScan-GEM), which is used for analyzing electrogastrography (EGG) data―recordings of the electrical activity of the gastrointestinal (GI) tract.

Description of the Interface

Tabs at the top:

"Сигнал" (Signal)

"Цифровая фильтрация" (Digital Filtering)

"Спектр (общий график)" (Spectrum - General Graph)

"Спектр (по мощности)" (Spectrum - Power)

"Спектр (по частоте)" (Spectrum - Frequency)

"Вейвлет (общий график)" (Wavelet - General Graph)

Graph Type Options (left panel):

Shaded

Colored

By GI sections

3D

Graph Scale Options:

Linear

Logarithmic

Graph Interpretation

3D spectral plot shows amplitude (A, ?V) vs time vs GI tract section.

X-axis (bottom) labels GI tract parts:

толстая (colon)

желудок (stomach)

подвздошная (ileum)

тощая (jejunum)

ДПК (duodenum)

Y-axis: Electrical activity amplitude (?V).

Z-axis: Time in minutes (from 00:10 to 00:35).

Key Observations

Peak activity appears early in the time series (~00:10?00:15) and is most pronounced in the stomach and colon regions.

The signal diminishes over time and appears more uniform in the latter GI regions (jejunum, duodenum).

The yellow baseline likely represents a noise floor or reference value.

Purpose

This visualization helps in evaluating:

Motility disorders

Gastroparesis

Postoperative ileus

GI myoelectrical activity in various gut segments."Make a gut pop".

제 생각을 담은 파일 하나인데요. 한 번 공유 부탁드려요. 그리고 학습목표 다양한 설계입니다. 앞으로 해나갈 일들.

voribob@naver.com 입니다

A convertible test, in the context of deep learning, likely refers to a testing methodology where the tests themselves or the testing process can be dynamically adapted, transformed, or "converted" based on the evolving insights from deep learning models. This could involve generating new test cases, prioritizing existing ones, or even reconfiguring the test environment.

Here's a revised research plan for "Deep Learning Method Research for Convertible Test Making," keeping in mind the current advancements and challenges in the field:

Revised Research Plan: Deep Learning Methods for Convertible Test Making

1. Introduction and Background

Problem Statement: Traditional software testing is often manual, time-consuming, and struggles to keep pace with the rapid development and complexity of modern software systems, especially those incorporating AI/ML components. Existing automated testing often relies on predefined test cases, which can be rigid and may not effectively cover emergent behaviors or edge cases. The concept of "convertible tests" addresses this by proposing a dynamic and adaptive testing approach driven by deep learning.

Motivation: Deep learning offers powerful capabilities in pattern recognition, anomaly detection, and generative tasks, making it a promising avenue for revolutionizing software testing. By leveraging deep learning, we aim to create a testing framework that can intelligently evolve its test suite, providing more comprehensive coverage and earlier defect detection.

Research Questions:

How can deep learning models be effectively trained to generate novel, diverse, and high-quality test cases for various software systems, particularly for systems with dynamic and complex behaviors?

What deep learning architectures and techniques are most suitable for analyzing system logs, code changes, and user behavior to inform the "convertibility" of tests (e.g., test case prioritization, modification, or generation)?

How can the interpretability and explainability of deep learning models in test generation be improved to foster trust and facilitate debugging for developers?

What metrics are most appropriate for evaluating the effectiveness and efficiency of deep-learning-driven convertible tests compared to traditional testing methods?

Can a practical framework be developed to integrate deep learning for convertible test making into existing Continuous Integration/Continuous Delivery (CI/CD) pipelines?

Scope: This research will focus on exploring deep learning methods for automated test case generation, test suite optimization (prioritization, selection, minimization), and dynamic test adaptation. While the broad concept of "convertible tests" could extend to test environment adaptation, this research will primarily concentrate on the test case and test suite aspects.

2. Literature Review

Automated Test Case Generation: Review existing deep learning approaches for test case generation, including:

Generative Adversarial Networks (GANs): For creating diverse and realistic test inputs.

Recurrent Neural Networks (RNNs) and Transformers: For sequential data analysis (e.g., user interaction logs, API call sequences) to predict and generate test cases.

Reinforcement Learning (RL): For exploratory testing, where an agent learns to navigate the system to uncover new and untested paths or identify critical states.

Test Suite Optimization: Analyze deep learning applications in:

Test Case Prioritization: Using DL to predict fault-prone areas or prioritize test cases based on historical defect data and code changes.

Test Suite Minimization/Selection: Identifying redundant or less effective test cases to reduce testing time and resources.

Challenges in Deep Learning for Testing: Address known limitations such as:

Data Dependency: The need for large volumes of high-quality, labeled training data.

Interpretability and Explainability: The "black-box" nature of many deep learning models and its impact on understanding generated tests.

Generalizability: Ensuring models can perform well on unseen or out-of-distribution data.

Computational Resources: The demand for significant computational power for training and inference.

Existing Frameworks and Tools: Survey current tools and frameworks that incorporate AI/ML into software testing.

3. Methodology

This research will adopt a mixed-methods approach, combining theoretical exploration with practical implementation and empirical evaluation.

3.1. Data Collection and Preprocessing

Sources: Gather diverse datasets including:

Historical defect data and bug reports.

Code repositories (source code, commit history).

System logs and user interaction traces.

Requirements documents and user stories (for natural language processing).

Preprocessing: Develop robust techniques for:

Feature Engineering: Extracting relevant features from code, logs, and other data sources.

Data Labeling/Annotation: Where necessary, manually or semi-automatically labeling data for supervised learning tasks (e.g., identifying fault-revealing test cases).

Data Augmentation: Techniques to expand limited datasets.

3.2. Deep Learning Model Selection and Development

Test Case Generation:

Generative Models: Investigate and adapt GANs or Variational Autoencoders (VAEs) for generating test inputs, focusing on diversity and validity. Explore conditional generation to target specific functionalities or fault types.

Sequence-to-Sequence Models (Transformers/LSTMs): For generating test scripts or sequences of actions based on requirements or system states.

Reinforcement Learning (e.g., Deep Q-Networks, Proximal Policy Optimization): For active learning and exploratory testing, where the model learns optimal testing strategies by interacting with the system under test.

Test Suite Optimization:

Classification/Regression Models: Using CNNs, RNNs, or Transformers to predict the fault-revealing potential of test cases, enabling prioritization.

Clustering Algorithms (combined with DL for feature extraction): For identifying redundant test cases for minimization.

Hybrid Approaches: Explore combining deep learning with traditional search-based software testing (SBST) or rule-based methods to leverage the strengths of both.

3.3. Convertible Test Mechanism Design

Dynamic Adaptation: Design a mechanism where the deep learning models continuously learn from new data (e.g., test execution results, code changes) and automatically "convert" or refine the test suite. This could involve:

Triggering Retraining: Automatically initiating model retraining when significant code changes or new defect patterns are observed.

Adaptive Test Selection/Prioritization: Dynamically adjusting the set of tests to be executed based on the current risk profile of the software.

On-the-Fly Test Case Generation: Generating new test cases in response to unexpected system behavior or low coverage in specific areas.

Feedback Loop: Establish a robust feedback loop from test execution results back to the deep learning models to enable continuous improvement.

3.4. Evaluation Framework

Metrics: Define comprehensive evaluation metrics:

Test Coverage: Code coverage (statement, branch, path), mutation coverage.

Fault Detection Rate: Number of unique defects identified.

Test Suite Size/Execution Time: Efficiency metrics.

Test Case Diversity and Novelty: To assess the ability of generative models.

Interpretability: Quantify the explainability of generated tests (e.g., using LIME, SHAP).

Developer Satisfaction/Usability: Through surveys or qualitative analysis.

Baselines: Compare the proposed deep learning approaches against:

Manual testing.

Traditional automated testing frameworks.

State-of-the-art non-deep learning methods for test generation and optimization.

Case Studies: Apply the developed methods to real-world software projects (open-source or internal if possible) to demonstrate their practical applicability and effectiveness.

4. Implementation and Experimentation

Programming Languages and Frameworks: Python with deep learning libraries such as TensorFlow, PyTorch.

Tools: Utilize existing testing frameworks (e.g., Selenium, JUnit, Pytest) for integration and execution.

Experimental Setup:

Define clear experimental protocols for training, validation, and testing of deep learning models.

Establish controlled environments for conducting comparative studies.

Address computational resource requirements and explore strategies for efficient model deployment (e.g., model compression, distributed training).

5. Expected Outcomes and Contributions

A novel framework for "convertible test making" driven by deep learning.

Demonstrably improved test coverage and fault detection rates compared to traditional methods.

Reduced manual effort and time spent on test case design and maintenance.

Insights into the most effective deep learning architectures and techniques for different aspects of software testing.

Strategies for enhancing the interpretability and explainability of deep-learning-generated tests.

Empirical evidence of the practical benefits and challenges of integrating deep learning into software testing workflows.

Open-source tools or libraries to facilitate the adoption of deep learning in automated testing.

6. Timeline (Example)

Months 1-3: In-depth literature review, data collection strategy, initial dataset preparation.

Months 4-7: Deep learning model selection, architecture design, initial model training for test case generation.

Months 8-11: Integration of test suite optimization techniques, development of convertible test mechanism, initial experimental setup.

Months 12-15: Extensive experimentation, data analysis, performance evaluation, iterative refinement of models and framework.

Months 16-18: Case studies on real-world projects, interpretability analysis, results interpretation, and report writing.

Months 19-21: Thesis writing/publication preparation, dissemination of results.

7. Resources Required

Computational Resources: High-performance computing (GPUs), cloud computing platforms.

Software: Deep learning frameworks, testing tools, version control systems.

Personnel: Researchers with expertise in deep learning, software engineering, and testing.

Data: Access to diverse and representative software project data.

This revised plan provides a more structured and comprehensive approach to researching deep learning methods for convertible test making, addressing key challenges and outlining a clear path for impactful contributions.

Deep Research

- An electrogastrogram (EGG) is a test that records the electrical activity of the stomach muscles, similar to how an electrocardiogram (ECG) records the heart’s electrical signals. It helps detect abnormalities in stomach motility, which can be useful in diagnosing conditions like gastroparesis or unexplained nausea and vomiting.

- The procedure involves placing electrodes on the abdomen to measure the stomach’s electrical rhythms before and after a meal. A normal EGG shows a consistent rhythm, while irregular patterns may indicate issues with the stomach’s muscles or nerves. The test is painless and has no known side effects.

,,

Certainly! An electrogastrogram (EGG) is a non-invasive test that measures the stomach’s electrical activity. Here’s how it’s performed:

- Preparation: You’ll be asked to wear a patient gown and lie down on a procedure table.

- Electrode Placement: Several electrodes (similar to those used in an ECG) are taped onto your abdomen to detect electrical signals from your stomach muscles.

- Baseline Recording: The test begins with a fasting recording, where your stomach’s electrical activity is measured while empty.

- Meal Consumption: After about an hour, you’ll be given a small meal or liquid to observe how your stomach responds to digestion.

- Post-Meal Recording: The test continues as the electrodes record changes in stomach activity after eating.

- Completion: The entire process takes about two hours, and once finished, the electrodes are removed, and you can go home.

,,

The results of an electrogastrogram (EGG) help assess the stomach’s electrical activity and its ability to regulate digestion. Here’s how they are typically interpreted:

- Normal Results: A healthy stomach generates regular electrical rhythms at about three cycles per minute. After eating, the electrical activity should increase, indicating proper muscle contractions for digestion.

- Bradygastria (Slow Waves): If the stomach’s electrical activity is slower than normal, it may suggest gastroparesis or delayed gastric emptying, leading to symptoms like bloating and nausea.

- Tachygastria (Fast Waves): If the electrical activity is faster than normal, it could indicate functional dyspepsia or other motility disorders.

- Irregular Patterns: An inconsistent rhythm or lack of post-meal increase in electrical activity may suggest neuromuscular dysfunction, meaning the stomach muscles or nerves aren’t working properly.

Doctors use EGG results alongside other tests, like gastric emptying studies, to diagnose digestive disorders more accurately.

,,

first i need check ECG for first assignment to test the server program like beta role coast.

#include

int main() {

// Python 초기화

Py_Initialize();

// Python 스크립트 실행

PyRun_SimpleString("import tensorflow as tf");

PyRun_SimpleString("from tensorflow.keras.models import load_model");

PyRun_SimpleString("model = load_model('model.h5')");

PyRun_SimpleString("predictions = model.predict([some_input_data])");

// Python 종료

Py_Finalize();

return 0;

}

An electrogastrogram (EGG) is primarily used to assess gastric motility disorders by measuring the stomach’s electrical activity. While it doesn’t provide a definitive diagnosis on its own, it helps identify abnormalities that may indicate certain conditions. Some of the diseases and disorders that EGG can help diagnose include:

- Gastroparesis ? A condition where the stomach empties food too slowly, often causing nausea, bloating, and vomiting.

- Functional Dyspepsia ? Chronic indigestion without an identifiable structural cause, often linked to abnormal gastric rhythms.

- Gastric Dysrhythmias ? Irregular electrical activity in the stomach, which can contribute to symptoms like early satiety, bloating, and discomfort.

- Post-Surgical Gastric Dysfunction ? Some patients experience altered gastric motility after surgeries like gastric bypass or vagotomy.

- Chronic Nausea and Vomiting Syndromes ? EGG can help determine whether these symptoms are related to abnormal stomach electrical activity.

- Diabetic Gastropathy ? Diabetes can affect the nerves controlling stomach muscles, leading to delayed gastric emptying.

- Irritable Bowel Syndrome (IBS) with Gastric Involvement ? Some IBS patients experience upper GI symptoms that may be linked to gastric dysrhythmias.

EGG is often used alongside other tests, such as gastric emptying studies and manometry, to provide a more comprehensive diagnosis.

A deep learning model can be highly effective for the analysis of Electrogastrography (EGG) paper work, offering several advantages over traditional methods. Here's a breakdown of how such a model can be structured and the potential benefits:

Potential Tasks for a Deep Learning Model in EGG Analysis:

* Automated Feature Extraction:'자동화된 특징 추출(Automated Feature Extraction)은 머신러닝과 딥러닝에서 중요한 개념으로, 원시 데이터를 분석하여 의미 있는 특징을 자동으로 식별하고 추출하는 과정입니다. 이를 통해 모델의 성능을 향상시키고, 데이터의 차원을 줄여 계산 비용을 절감할 수 있습니다.

자동화된 특징 추출의 주요 개념

신호 및 이미지 처리: 신호 및 이미지 데이터에서 중요한 패턴을 자동으로 감지하여 특징을 추출합니다. 예를 들어, CNN(Convolutional Neural Network)은 이미지에서 엣지, 텍스처, 모양 등의 특징을 자동으로 학습합니다.

자연어 처리(NLP): 텍스트 데이터에서 의미 있는 단어 또는 문장 구조를 자동으로 추출하여 모델이 학습할 수 있도록 합니다. 예를 들어, Word Embedding 기법을 사용하여 단어 간의 관계를 벡터로 변환할 수 있습니다.

시계열 데이터 분석: 금융, 의료, 센서 데이터 등에서 중요한 패턴을 자동으로 감지하여 예측 모델을 개선합니다. 예를 들어, 웨이블릿 변환(Wavelet Transform)과 같은 기법을 활용하여 시계열 데이터의 특징을 추출할 수 있습니다.

자동화된 특징 추출의 장점

수작업 없이 특징을 자동으로 학습하여 데이터 분석 속도를 높일 수 있습니다.

고차원 데이터를 저차원으로 변환하여 계산 비용을 줄이고 모델의 성능을 향상시킵니다.

노이즈 제거 및 데이터 정제를 통해 더욱 신뢰할 수 있는 분석 결과를 제공합니다.

자동화된 특징 추출은 다양한 분야에서 활용되며, 특히 딥러닝 모델의 성능을 극대화하는 데 중요한 역할을 합니다.' Deep learning models, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), can automatically learn relevant features from raw EGG signals, eliminating the need for manual feature engineering. This can capture complex patterns that might be missed by traditional methods like frequency domain analysis.

* Noise Reduction and Artifact Removal:'노이즈 감소(Noise Reduction)와 아티팩트 제거(Artifact Removal)는 신호 처리 및 데이터 분석에서 중요한 과정입니다. 특히 의료 데이터, 이미지 처리, 음성 인식 및 생체 신호 분석에서 노이즈와 아티팩트를 효과적으로 제거하는 것이 필수적입니다.

노이즈 감소(Noise Reduction)

노이즈는 원본 신호에 포함된 불필요한 변동 요소로, 데이터의 정확성을 저하시킬 수 있습니다. 노이즈를 줄이는 방법에는 다음과 같은 기법이 있습니다:

필터링(Filter Techniques): 저역통과 필터(Low-pass filter), 고역통과 필터(High-pass filter), 대역통과 필터(Band-pass filter) 등을 사용하여 특정 주파수 대역의 노이즈를 제거합니다.

웨이블릿 변환(Wavelet Transform): 신호를 여러 스케일로 분해하여 노이즈를 제거하는 기법으로, 의료 신호 분석에서 많이 사용됩니다.

적응형 필터(Adaptive Filtering): 신호의 특성을 실시간으로 분석하여 최적의 필터링을 수행하는 방법입니다.

아티팩트 제거(Artifact Removal)

아티팩트는 신호에 포함된 원치 않는 변형 요소로, 데이터 분석의 정확성을 저해할 수 있습니다. 아티팩트를 제거하는 방법에는 다음과 같은 기법이 있습니다:

독립 성분 분석(Independent Component Analysis, ICA): 여러 신호가 혼합된 경우, 원본 신호를 분리하여 아티팩트를 제거하는 기법입니다.

기계 학습 기반 제거(Deep Learning-based Artifact Removal): 딥러닝 모델을 활용하여 신호에서 아티팩트를 자동으로 감지하고 제거하는 방법입니다. 특히 MRI 이미지에서 노이즈와 아티팩트를 제거하는 연구가 진행되고 있습니다.

신호 복원(Reconstruction Methods): 손상된 신호를 복원하는 기법으로, 의료 영상 및 생체 신호 분석에서 활용됩니다.

이러한 기법들은 다양한 분야에서 활용되며, 특히 의료 데이터 분석에서 중요한 역할을 합니다.' Deep learning models can be trained to identify and filter out various types of noise and artifacts (e.g., respiratory interference, motion artifacts) present in EGG signals, leading to cleaner and more reliable data.

* Disease Classification and Diagnosis: By training on labeled EGG data from healthy individuals and patients with various gastrointestinal (GI) disorders, a deep learning model can learn to classify different conditions based on their EGG patterns. This could aid in the diagnosis of motility disorders, functional dyspepsia, nausea, and other GI issues.

* Prediction of Gastrointestinal State: Models can be developed to predict different functional states of the GI system (e.g., fasting, postprandial, stimulated) based on the EGG signal.

* Personalized Medicine: With sufficient data, deep learning models could potentially learn subtle individual variations in EGG signals and contribute to more personalized diagnoses and treatment strategies.

* High-Resolution EGG Analysis: Deep learning is well-suited for analyzing the large amounts of data generated by high-resolution EGG (HR-EGG) systems, extracting spatial and temporal patterns related to gastric slow wave propagation.

* Anomaly Detection: Models can be trained to identify unusual or abnormal patterns in EGG signals that might indicate a problem even if it doesn't fit neatly into a specific diagnostic category.

Types of Deep Learning Models Suitable for EGG Analysis:

* Convolutional Neural Networks (CNNs): CNNs are excellent for learning spatial hierarchies in data. In the context of EGG, 1D CNNs can be used to analyze the temporal patterns and local features within the signal. They can automatically learn relevant frequency and morphological characteristics.

* Recurrent Neural Networks (RNNs), especially LSTMs,LSTM(Long Short-Term Memory)은 순환신경망(RNN)의 한계를 극복하기 위해 개발된 신경망 모델입니다. 기존 RNN은 긴 시퀀스를 학습할 때 기울기 소실(Gradient Vanishing) 문제로 인해 장기 의존성을 학습하기 어려웠습니다. 이를 해결하기 위해 LSTM은 셀 상태(Cell State)와 게이트(Gate) 메커니즘을 도입하여 중요한 정보를 오랫동안 유지할 수 있도록 설계되었습니다,and GRUs: RNNs are designed to handle sequential data and can capture temporal dependencies in EGG signals. LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units) are particularly good at learning long-range dependencies, which can be important in analyzing the evolving patterns of gastric electrical activity.

* Hybrid Models (e.g., CNN-RNN): Combining CNNs for feature extraction and RNNs for temporal modeling can leverage the strengths of both architectures and potentially lead to improved performance.

* Autoencoders: These can be used for unsupervised feature learning and anomaly detection by learning a compressed representation of normal EGG signals. Deviations from this normal representation can then be flagged as potential abnormalities.

Workflow for Developing a Deep Learning Model for EGG Analysis:

* Data Collection and Preprocessing:

* Gather a large and diverse dataset of EGG recordings from healthy individuals and patients with various GI conditions.

* Preprocess the data to remove noise and artifacts (e.g., using filtering techniques or specialized artifact removal algorithms).

* Normalize or standardize the data to ensure consistent input scales for the model.

* Segment the EGG recordings into appropriate time windows for analysis.

* Data Labeling:

* Accurately label the EGG segments based on the corresponding clinical information (e.g., diagnosis, physiological state). This is crucial for supervised learning tasks like classification.

* Model Selection and Design:

* Choose an appropriate deep learning architecture (e.g., 1D CNN, LSTM, CNN-LSTM) based on the specific task and the characteristics of the EGG data.

* Design the model architecture, including the number of layers, filter sizes, kernel sizes, activation functions, and other hyperparameters.

* Training the Model:

* Split the labeled data into training, validation, and testing sets.

* Train the deep learning model on the training data using an optimization algorithm (e.g., Adam, SGD) and a suitable loss function (e.g., cross-entropy for classification, mean squared error for regression).

* Use the validation set to tune the model's hyperparameters and prevent overfitting.

* Evaluation:

* Evaluate the trained model's performance on the unseen test set using appropriate metrics (e.g., accuracy, sensitivity, specificity, AUC for classification; mean absolute error, root mean squared error for regression).

* Compare the performance of the deep learning model with traditional EGG analysis methods.

* Interpretation and Explainability (Optional but Important):

* Explore techniques to understand what features the deep learning model has learned from the EGG signals that are important for its predictions. This can help build trust in the model and potentially provide new insights into the underlying physiological mechanisms. Techniques like attention mechanisms or visualization of convolutional filters can be useful.

* Deployment and Clinical Integration:

* Develop a system for deploying the trained model in a clinical setting.

* Integrate the model with existing EGG recording and analysis workflows.

* Conduct clinical validation studies to assess the model's real-world performance and impact.

Advantages of Using Deep Learning for EGG Analysis:

* Automatic Feature Learning: Reduces the need for expert knowledge in manual feature extraction.

* Handling Complex Patterns: Can capture non-linear and intricate relationships in the EGG signal.

* Robustness to Noise: Can be trained to be more resilient to noise and artifacts compared to traditional methods.

* Improved Accuracy: Has the potential to achieve higher accuracy in classification and prediction tasks.

* Analysis of High-Dimensional Data: Well-suited for analyzing multi-channel HR-EGG data.

Challenges and Considerations:

* Data Requirements: Deep learning models typically require large amounts of labeled data for effective training. Obtaining sufficient and high-quality EGG datasets can be challenging.

* Interpretability: Deep learning models can be "black boxes," making it difficult to understand why they make certain predictions. This can be a concern in clinical applications where interpretability is often desired.

* Generalizability: Models trained on data from one population or using a specific EGG recording system might not generalize well to other populations or systems.

* Computational Resources: Training deep learning models can be computationally intensive, requiring significant processing power and time.

* Standardization: Lack of standardization in EGG recording protocols and data formats can hinder the development of robust and generalizable deep learning models.

Conclusion:

Deep learning offers significant promise for advancing the analysis of EGG paper work. By automating feature extraction, handling complex patterns, and potentially improving diagnostic accuracy, these models can contribute to a better understanding of gastrointestinal physiology and the development of more effective diagnostic and therapeutic strategies for GI disorders. However, addressing the challenges related to data availability, interpretability, and generalizability is crucial for realizing the full potential of deep learning in this field.

Here's a basic deep learning code example for Electrogastrography (EGG) signal analysis using Python and TensorFlow/Keras. This assumes you're working with preprocessed EGG data (e.g., after filtering, downsampling, and segmentation into windows) and trying to classify it (e.g., normal vs. abnormal gastric rhythms).

Mock Medical Education Eligibility Test Generation using Deep Learning: A Conceptual Framework

Abstract: The increasing demand for fair and efficient evaluation in medical education necessitates innovative approaches to test creation. This paper proposes a conceptual framework for leveraging deep learning models to generate mock Medical Education Eligibility Test (MEET) questions. We explore various deep learning architectures and methodologies that can be employed to create diverse, relevant, and challenging questions, potentially enhancing the quality and efficiency of test preparation. This framework addresses key aspects of question generation, including content understanding, question type variation, difficulty control, and evaluation metrics.

Keywords: Deep Learning, Medical Education, Eligibility Test, Question Generation, Natural Language Processing.

1. Introduction

Standardized eligibility tests play a crucial role in evaluating the knowledge and aptitude of aspiring medical professionals. The Medical Education Eligibility Test (MEET), in particular, assesses a broad range of scientific knowledge and critical thinking skills essential for success in medical studies. However, the manual creation of high-quality test questions is a time-consuming and resource-intensive process.

Deep learning, with its remarkable advancements in natural language processing (NLP) and sequence generation, offers a promising avenue for automating and enhancing the test generation process. By training on a large corpus of medical textbooks, research papers, and past examination materials, deep learning models can learn the underlying patterns and structures of medical knowledge, enabling them to generate novel and relevant test questions.

This paper outlines a conceptual framework for developing a deep learning-based system for generating mock MEET questions. We will discuss the key components of such a system, including data acquisition and preprocessing, model selection and training, question generation strategies, and evaluation metrics.

2. Related Work

The application of artificial intelligence (AI), including machine learning and deep learning, in medical education is an increasingly active area of research. Several studies have explored the use of AI for tasks such as automated grading, personalized learning, and curriculum design.

In the realm of question generation, various approaches have been investigated, including rule-based methods, statistical techniques, and more recently, deep learning models. Deep learning models, particularly sequence-to-sequence architectures like Recurrent Neural Networks (RNNs) and Transformers, have shown significant potential in generating natural language text, including questions.

Specifically, research has explored the use of deep learning for generating questions in other domains, such as general knowledge and specific academic subjects. These studies have demonstrated the ability of models to generate diverse question types, control question difficulty, and align questions with specific learning x-x-x-x-x-x-x-x-objectives.

3. Proposed Framework

Our proposed framework for mock MEET question generation using deep learning consists of the following key stages:

3.1 Data Acquisition and Preprocessing:

* Data Sources: A comprehensive dataset of medical text is crucial for training the deep learning model. This can include:

* Medical textbooks and reference materials

* Research articles and publications (e.g., from PubMed)

* Past MEET question papers and answer keys

* Curriculum guidelines and learning x-x-x-x-x-x-x-x-objectives

* Data Preprocessing: The acquired data needs to be preprocessed to ensure its suitability for training. This may involve:

* Text cleaning (removing irrelevant characters, HTML tags, etc.)

* Tokenization (splitting text into individual words or sub-word units)

* Vocabulary creation (building a mapping of unique tokens)

* Data augmentation (e.g., paraphrasing existing questions to create variations)

3.2 Model Selection and Training:

* Model Architectures: Several deep learning architectures can be considered for question generation:

* Recurrent Neural Networks (RNNs): LSTMs and GRUs can process sequential data and generate text step-by-step.

* Transformers: Models like BERT, GPT-2, and their variants excel at capturing long-range dependencies and generating coherent text. Fine-tuning pre-trained language models on the medical text corpus can be particularly effective.

* Sequence-to-Sequence Models with Attention: These models can learn to map input context (e.g., a medical concept) to an output sequence (the question).

* Training Process: The chosen model will be trained on the preprocessed medical text data using appropriate training x-x-x-x-x-x-x-x-objectives. For question generation, this typically involves maximizing the likelihood of the target question given some input context (e.g., a sentence from a textbook).

* Contextual Input: The model can be conditioned on various types of input to control the generated questions:

* Keywords/Concepts: Providing specific medical terms or concepts can guide the model to generate questions related to those topics.

* Difficulty Level: Incorporating a difficulty level as an input can influence the complexity of the generated questions.

* Question Type: Specifying the desired question format (e.g., multiple-choice, true/false) can guide the model's output.

3.3 Question Generation Strategies:

* Fill-in-the-Blank Generation: Identifying key terms in a sentence and replacing them with blanks.

* Multiple-Choice Question Generation:

* Generating a plausible correct answer based on the input context.

* Generating plausible and challenging distractors (incorrect options) that are related to the correct answer or common misconceptions.

* True/False Question Generation: Creating statements that are either directly true or can be made false by altering a key detail.

* Reasoning-Based Question Generation: Generating questions that require the application of medical knowledge and critical thinking skills to solve a scenario or interpret data.

3.4 Evaluation Metrics:

Evaluating the quality of the generated mock MEET questions is crucial. Several metrics can be employed:

* Natural Language Processing Metrics:

* BLEU (Bilingual Evaluation Understudy): Measures the similarity between the generated question and a set of reference questions.

* ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Evaluates the overlap of n-grams between the generated and reference text, focusing on recall.

* Perplexity: Measures the model's uncertainty in predicting the next word in a sequence (lower is better).

* Medical Domain-Specific Metrics:

* Relevance: Assessing whether the generated question is relevant to the medical domain and the scope of the MEET. This can be done through expert evaluation.

* Correctness: Ensuring that the generated question has a clear and unambiguous correct answer.

* Difficulty: Evaluating the cognitive demand of the question, potentially categorized by medical professionals.

* Discrimination: Assessing the ability of the question to differentiate between students with different levels of knowledge. This can be evaluated by administering the generated questions to a sample group.

* Plausibility of Distractors (for MCQs): Evaluating whether the incorrect options are plausible yet clearly wrong.

4. Potential Benefits and Challenges

4.1 Potential Benefits:

* Increased Efficiency: Automating the question generation process can significantly reduce the time and effort required to create mock MEET papers.

* Enhanced Diversity: Deep learning models can generate a wider variety of question types and cover a broader range of topics compared to manual creation.

* Improved Quality: By learning from a large dataset, models can potentially generate questions that are more relevant, challenging, and aligned with the curriculum.

* Personalized Learning: The system could be adapted to generate questions tailored to individual students' learning needs and weaknesses.

* Reduced Bias: Automated generation can potentially mitigate biases that might inadvertently creep into manually created questions.

4.2 Challenges:

* Data Scarcity and Quality: Obtaining a sufficiently large and high-quality dataset of medical text and past MEET questions can be challenging.

* Ensuring Medical Accuracy: The generated questions must be medically accurate and free from errors. This requires careful model training and validation.

* Generating High-Order Thinking Questions: Creating questions that assess critical thinking, problem-solving, and clinical reasoning is more complex than generating factual recall questions.

* Controlling Question Difficulty and Relevance: Precisely controlling the difficulty and ensuring the relevance of generated questions to the MEET syllabus requires sophisticated techniques.

* Evaluation Complexity: Developing robust evaluation metrics that capture the nuances of question quality in the medical domain is an ongoing challenge.

* Ethical Considerations: Ensuring fairness and avoiding the generation of biased or misleading questions is crucial.

5. Conclusion and Future Directions

This paper presents a conceptual framework for utilizing deep learning models to generate mock Medical Education Eligibility Test questions. By leveraging the power of NLP and sequence generation, this approach holds the potential to enhance the efficiency, diversity, and potentially the quality of test preparation materials.

Future research should focus on:

* Experimenting with different deep learning architectures and training strategies for medical question generation.

* Developing effective methods for controlling question difficulty, type, and relevance.

* Creating robust evaluation metrics that incorporate expert medical knowledge.

* Investigating techniques for generating higher-order thinking questions and clinical reasoning scenarios.

* Exploring the integration of this technology into personalized learning platforms for medical students.

By addressing the challenges and pursuing these future directions, deep learning can become a valuable tool in medical education, contributing to more effective and efficient assessment and preparation for crucial eligibility examinations like the MEET.

coding..

1. Install Required Libraries

pip install transformers datasets torch

---

2. Prepare the Dataset Format

The dataset should be in a format like this (CSV or JSON):

context,question

"The pancreas secretes insulin to regulate blood sugar levels.","What hormone does the pancreas secrete to regulate blood sugar?"

---

3. Fine-Tuning GPT-2 for Question Generation

from transformers import GPT2Tokenizer, GPT2LMHeadModel, Trainer, TrainingArguments, TextDataset, DataCollatorForLanguageModeling

import torch

# Load GPT-2 tokenizer and model

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

model = GPT2LMHeadModel.from_pretrained("gpt2")

# Add special tokens

tokenizer.add_special_tokens({'pad_token': '[PAD]'})

model.resize_token_embeddings(len(tokenizer))

# Prepare dataset (assumes a text file with lines like:

def load_dataset(file_path, tokenizer, block_size=128):

return TextDataset(

tokenizer=tokenizer,

file_path=file_path,

block_size=block_size,

)

# Data collator for language modeling

def get_collator(tokenizer):

return DataCollatorForLanguageModeling(

tokenizer=tokenizer,

mlm=False,

)

# Load data

train_dataset = load_dataset("medical_questions_train.txt", tokenizer)

data_collator = get_collator(tokenizer)

# Training arguments

training_args = TrainingArguments(

output_dir="./gpt2-medical-qgen",

overwrite_output_dir=True,

num_train_epochs=3,

per_device_train_batch_size=2,

save_steps=500,

save_total_limit=2,

)

# Trainer

trainer = Trainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_dataset,

)

# Train the model

trainer.train()

---

4. Inference (Generating Questions)

def generate_question(context):

prompt = f"{context}

inputs = tokenizer(prompt, return_tensors="pt")

---

1. Prerequisites

pip install numpy pandas matplotlib scikit-learn tensorflow

---

2. Sample Code: EGG Signal Classification using CNN

import numpy as np

import pandas as pd

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv1D, MaxPooling1D, Flatten, Dense, Dropout, BatchNormalization

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder

from tensorflow.keras.utils import to_categorical

# Load your EGG dataset (replace with your actual file or array)

# Example: EGG signals in shape (samples, timepoints), labels in (samples,)

data = np.load("egg_signals.npy") # shape (n_samples, n_timesteps)

labels = np.load("egg_labels.npy") # shape (n_samples,)

# Encode labels (e.g., normal vs abnormal -> 0,1)

encoder = LabelEncoder()

encoded_labels = encoder.fit_transform(labels)

categorical_labels = to_categorical(encoded_labels)

# Train/test split

X_train, X_test, y_train, y_test = train_test_split(data, categorical_labels, test_size=0.2, random_state=42)

# Build CNN model

model = Sequential([

Conv1D(64, 3, activation='relu', input_shape=(X_train.shape[1], 1)),

BatchNormalization(),

MaxPooling1D(2),

Dropout(0.3),

Conv1D(128, 3, activation='relu'),

MaxPooling1D(2),

Dropout(0.3),

Flatten(),

Dense(64, activation='relu'),

Dropout(0.4),

Dense(y_train.shape[1], activation='softmax')

])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Reshape input for Conv1D

X_train = X_train.reshape(-1, X_train.shape[1], 1)

X_test = X_test.reshape(-1, X_test.shape[1], 1)

# Train model

model.fit(X_train, y_train, epochs=30, batch_size=32, validation_split=0.2)

# Evaluate

loss, acc = model.evaluate(X_test, y_test)

print(f"Test accuracy: {acc:.2f}")

---

3. Notes

Replace egg_signals.npy and egg_labels.npy with your actual data.

You may need to preprocess EGG signals: filter (e.g., 0.05?0.15 Hz), normalize, segment into windows.

You can experiment with RNNs (e.g., LSTM) or transformers for temporal modeling if CNNs don’t work well.

Would you like me to generate synthetic EGG-like data for testing or include advanced architectures like LSTM or attention mechanisms?

This is the current plan. What changes do you want to make? Sono...

(1) Identify the primary applications and use cases of deep learning in ultrasound imaging across various domains (e.g., medical diagnostics, industrial inspection).

(2) Explore common deep learning architectures and models (e.g., CNNs, U-Nets, GANs) specifically adapted for ultrasound data analysis, including image segmentation, classification, and reconstruction.

(3) Investigate the specific challenges and limitations of applying deep learning to ultrasound data, such as data scarcity, speckle noise, and image artifacts, and proposed solutions.

(4) Research recent advancements and emerging trends in ultrasound deep learning, including novel techniques, hybrid approaches, or real-time processing methods.

(5) Examine specific medical or industrial applications where deep learning has significantly impacted ultrasound diagnostics or analysis, providing concrete examples of improved accuracy or efficiency.

(6) Identify publicly available datasets and common benchmarking methods used in ultrasound deep learning research to evaluate model performance.

(7) Find prominent research groups, institutions, or key researchers actively publishing in the field of deep learning for ultrasound.

(8) Locate highly cited review articles, surveys, or seminal papers from reputable journals and conferences focusing on deep learning in ultrasound.

- 첨부파일

- 첨부파일이(가) 없습니다.